The Multi-Processor Computing runtime (MPC) is a unified runtime targeting the MPI, OpenMP and PThread standards. One of its main specificities is its ability to run MPI processes inside threads, making it a thread-based MPI implementation.

For more information: http://mpc.hpcframework.com

InHP@ct is a seminar-hosting initiative taking place at the Ter@tec Campus, encouraging people to share their HPC knowledge with an audience composed of experts and HPC technology aficionados. Workshops held at Ter@tec are also accessible through this resource.

For more information: http://inhpact.hpcframework.com

MALP is an online performance tracing tool that aims to overcome common file-system limitations by relying on runtime coupling between running applications.

MALP can generate compact views of a parallel application's behavior. Its web interface and interactive visualizations put a better understanding of the application's MPI behavior at scale at your fingertips.

For more information: http://malp.hpcframework.com

The Parallel Computing Validation System (PCVS) is a validation orchestrator designed for software at scale. Whatever the number of programs, benchmarks, languages or technologies a non-regression base uses, PCVS gathers them in a single run and, by interacting efficiently with HPC batch managers, runs jobs concurrently to reduce the overall time-to-result. Driven by basic YAML-based configuration files, PCVS handles hundreds of thousands of tests and helps developers ensure that code production keeps moving forward.

Although PCVS is a validation engine and does not ship any benchmarks of its own, it provides configurations for the most widely used MPI/OpenMP test applications (benchmarks and proxy apps). Together these form a base of more than 300,000 tests, offering a new way to compare how well implementations comply with the standards.

For more information: https://pcvs.hpcframework.com

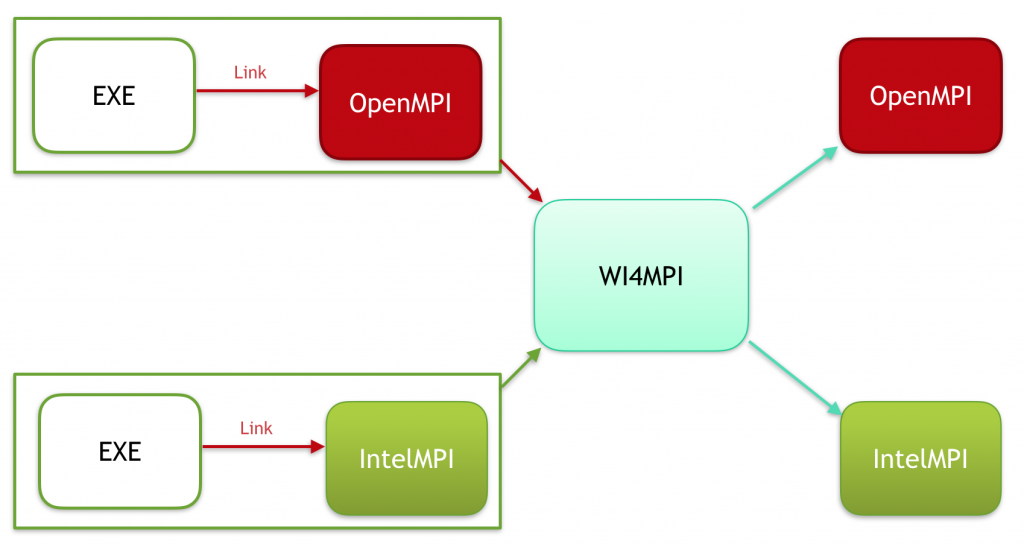

WI4MPI is a lightweight framework that translates MPI constants and MPI objects from one MPI implementation to another. Through two different approaches (preload or interface), WI4MPI aims to lift the requirement that an application use the same MPI implementation at compile time and at runtime.

For more information: https://github.com/cea-hpc/wi4mpi